Let’s continue the conversation about PLM and AI that started last week. Artificial Intelligence (AI) and ChatGPT have been trending topics for the last 100 days, triggering many questions and discussions across diverse groups of people and businesses. The technology introduced by OpenAI and other companies has generated a substantial amount of feedback from people. What we have seen is how machine learning can fundamentally transform the way we live, work, and interact with the world around us. It is also a big opportunity for businesses to change their processes and decision-making. One of the most exciting developments in AI has been the emergence of Large Language Models (LLMs), such as GPT-3.5, which can generate human-like text and respond to natural language input in unprecedented ways. As LLMs continue to improve and evolve, their potential applications are expanding rapidly, from language translation and chatbots to content creation and even code generation.

I’ve been following discussions and research on the potential intersection of GPT, LLMs, and Product Lifecycle Management (PLM), which could revolutionize the way products are designed, developed, and brought to market. Check out my earlier articles:

Exploring the potential impact of GPT-3 on product lifecycle management (PLM)

Will PLM vendors unlock the potential of AI for industrial companies?

PLM and Digital Thread (part 5 – final)

In this blog, we will explore the latest developments in AI and LLMs, and examine how they may impact the future of PLM. We will discuss potential use cases for LLMs in the product development process, as well as the challenges and opportunities that arise from integrating these technologies into existing PLM systems.

AI and GPT for Dummies

Let’s discuss what AI and GPT are. Artificial intelligence (AI) is the ability of machines to perform cognitive tasks that usually require human intelligence. One of the most popular AI models used today is the Generative Pre-trained Transformer (GPT). GPT is a deep learning model that has been pre-trained on a large corpus of text data and can generate human-like language. The model is based on the transformer architecture, which allows it to process input text and generate fluent, contextually relevant output text. GPT is commonly used for natural language processing (NLP) tasks such as chatbots, language translation, and text summarization. In summary, AI and GPT are powerful technologies that are transforming the way we interact with machines and with each other.
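For readers curious about what the transformer architecture actually computes, here is a small sketch of scaled dot-product attention, the operation at the heart of a transformer layer. Each token's query is compared with every token's key, the scores are turned into weights with softmax, and the output is a weighted average of the value vectors. This is a plain-Python toy with hand-picked vectors, not how GPT is implemented: real models learn the query, key, and value projections, stack many attention heads and layers, and run on GPU tensor libraries.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries, keys, and values are lists of float vectors; keys and values
    must have the same number of rows. Returns one output vector per query.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs
```

Passing the same list of vectors as all three arguments, as in `attention(x, x, x)`, gives self-attention: each token mixes in information from the tokens most similar to it.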

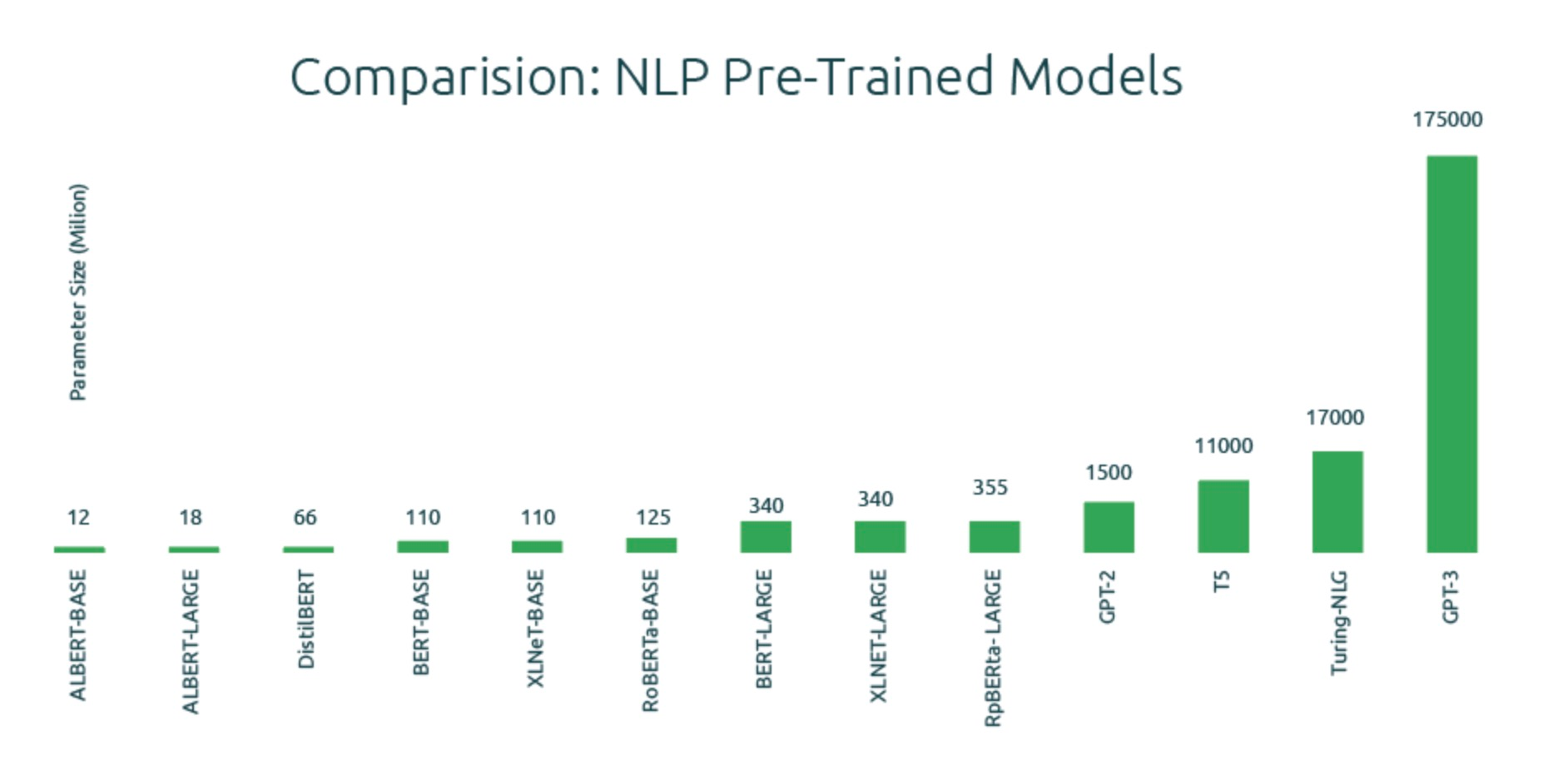

It is impressive to see the scale achieved by GPT-3: 1000x in two years. The chart shows the astonishing computational muscle applied to generative AI over the past five to six decades. As you can see, a few years ago the power of the cloud and extraordinary new silicon advancements drove an almost straight-up increase in “parameters,” which are, in a way, the essential ingredients that make Large Language Models work.

In general, the bigger the model, the better the performance. It is this wild acceleration in processing power that enabled the sudden and often shocking advances in generative AI. The recent release of GPT-4 actually makes the slide above a bit outdated.

These LLMs are the most expensive tech I’ve seen recently. Building a model with hundreds of billions of parameters requires massive clusters of supercomputers to train and run it. This capacity is far out of reach for most enterprises (yet).

It is just sophisticated math! As much as we all believe that GPT-3 “creates” something, that is not true. What we see in GPT-3 (and the ChatGPT application) is just math. These stunning performances and examples are not “created”; they are the result of massive computing power, probabilistic calculations, and remixing of words learned by the model. Think of a huge computational model that predicts the next word to assist humans in writing and answering questions. There are many examples, but the core fundamental is math at scale.
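To illustrate the “predict the next word” idea, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a sample text, turns those counts into probabilities, and samples the next word from them. GPT uses a transformer with billions of learned parameters rather than raw word counts, so treat this only as an analogy for the probabilistic principle; the corpus is made up.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn follow-up counts into a probability distribution."""
    follow = counts.get(word.lower(), Counter())
    total = sum(follow.values())
    return {w: c / total for w, c in follow.items()} if total else {}


def generate(counts, start, length, seed=0):
    """Sample one word at a time from the predicted distribution."""
    rng = random.Random(seed)
    out = [start.lower()]
    for _ in range(length):
        probs = next_word_probs(counts, out[-1])
        if not probs:  # no known continuation, stop early
            break
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)


corpus = "the bom lists the parts and the bom links the parts to the supplier"
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'bom': 0.4, 'parts': 0.4, 'supplier': 0.2}
print(generate(model, "the", 5))
```

Nothing here is “created”: every generated word is drawn from probabilities computed from the training text, which is the same point made above, only at a vastly smaller scale.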

Who Will Build PLM Hybrid Large Language Models (LLMs)?

A large language model (LLM) is a neural network-based model that has been trained on massive amounts of data using deep learning techniques. It can generate high-quality, human-like text for a variety of NLP tasks, such as language translation, sentiment analysis, and text summarization.

A number of LLMs have already been created to cover multiple fields, such as GPT, BERT, RoBERTa, Codex, T5, and XLNet. Many other large language models have been developed by various companies and research organizations, each with its own strengths and weaknesses.

Things start to get very interesting when LLMs are integrated with other models. A hybrid large language model (LLM) is an artificial intelligence model that combines two or more different types of models to achieve better performance than any individual model on its own. In the context of natural language processing (NLP), a hybrid LLM typically combines a neural network-based model with other types of models, such as rule-based models or probabilistic models.

Rule-based models use a set of explicit rules to analyze and process text, while probabilistic models use statistical techniques to infer relationships and patterns in data. By combining these different types of models, hybrid LLMs can outperform traditional LLMs on some tasks and can be used for a wider range of NLP tasks.
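The hybrid idea can be sketched in a few lines. Below, a hypothetical BOM-line classifier combines a rule-based component (regular expressions for patterns an engineer can state exactly) with a probabilistic component (a small naive Bayes model trained on labeled examples): rules decide when they match, and the statistical model handles everything else. Neither part is an LLM here; in a real hybrid system the probabilistic part would be a large neural model. The categories, patterns, and training examples are made-up assumptions.

```python
import math
import re
from collections import Counter, defaultdict

# Rule-based component: hand-written patterns (hypothetical examples).
RULES = [
    # Metric screw sizes such as "M4" or "M4x10".
    (re.compile(r"\bM\d+(x\d+)?\b", re.IGNORECASE), "fastener"),
    # Resistor values such as "10 ohm" or "4.7kohm".
    (re.compile(r"\b\d+(\.\d+)?\s*(k|m)?ohm\b", re.IGNORECASE), "electronic"),
]


class NaiveBayes:
    """Probabilistic component: multinomial naive Bayes with add-one smoothing."""

    def __init__(self, examples):
        self.class_counts = Counter(label for _, label in examples)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in examples:
            for w in text.lower().split():
                self.word_counts[label][w] += 1
                self.vocab.add(w)

    def predict(self, text):
        total = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.class_counts.items():
            score = math.log(n / total)  # log prior
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for w in text.lower().split():
                score += math.log((self.word_counts[label][w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


def hybrid_classify(text, model):
    """Deterministic rules win when they match; otherwise ask the model."""
    for pattern, label in RULES:
        if pattern.search(text):
            return label, "rule"
    return model.predict(text), "model"


examples = [
    ("hex bolt steel", "fastener"),
    ("socket head screw", "fastener"),
    ("ceramic capacitor smd", "electronic"),
    ("led indicator green", "electronic"),
    ("aluminum bracket", "mechanical"),
    ("steel mounting plate", "mechanical"),
]
model = NaiveBayes(examples)
print(hybrid_classify("Screw M4x10 zinc", model))
print(hybrid_classify("steel bracket", model))
```

The design choice is the point: rules give precise, explainable answers where engineering conventions are strict, while the statistical part covers the long tail of free-text descriptions that no rule set anticipates.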

I believe generative AI and LLM applications can be extended by developments in the PLM space to provide specific models that serve engineering and product lifecycle management communities. These language models can focus on product lifecycle management, supply chain management, manufacturing, and many other areas. To do this, PLM developers need to perform the following tasks:

- Identify use cases where generative AI adds meaningful value

- Build on PLM-specific unique capabilities and data assets

- Use existing LLMs and develop unique models too

- Develop specific applications focused on AI use cases
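One common way to combine PLM data assets with existing LLMs, as the tasks above suggest, is retrieval-augmented generation: find the product records relevant to a question and place them in the prompt sent to a general-purpose model. Below is a minimal sketch under that assumption. The `BomItem` structure, the keyword-overlap ranking, and the prompt wording are all hypothetical, and the actual LLM call is intentionally left out because it depends on the vendor's API.

```python
from dataclasses import dataclass


@dataclass
class BomItem:
    # Hypothetical, simplified BOM record.
    part_number: str
    description: str
    quantity: int
    supplier: str


def relevant_items(question, items, top_k=3):
    """Rank BOM items by how many question words appear in their fields."""
    q_words = set(question.lower().split())

    def score(item):
        text = f"{item.part_number} {item.description} {item.supplier}".lower()
        return len(q_words & set(text.split()))

    ranked = sorted(items, key=score, reverse=True)
    # Keep only items that share at least one word with the question.
    return [it for it in ranked[:top_k] if score(it) > 0]


def build_prompt(question, items):
    """Assemble a grounded prompt: retrieved product data first, then the question."""
    context = "\n".join(
        f"- {it.part_number}: {it.description}, qty {it.quantity}, supplier {it.supplier}"
        for it in relevant_items(question, items)
    )
    return (
        "Answer using only the product data below.\n"
        f"Product data:\n{context}\n"
        f"Question: {question}"
    )


items = [
    BomItem("P-100", "dc motor 12v", 2, "acme"),
    BomItem("P-200", "steel frame", 1, "globex"),
    BomItem("P-300", "lithium battery pack", 1, "initech"),
]
print(build_prompt("which vendor supplies the motor", items))
```

A production system would replace the keyword overlap with semantic search over embeddings, but the structure stays the same: the unique value comes from the PLM data placed in front of an existing model.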

The Copilot Era

The term “copilot” is increasingly used by the AI community and developers. In aviation, AI copilots are artificial intelligence (AI) systems that assist human pilots in carrying out their duties. These AI copilots can help pilots in a variety of ways, such as by providing real-time data analysis, alerting them to potential hazards, and suggesting actions to avoid or mitigate those hazards.

For example, generative design, a copilot function in CAD systems that has become popular recently, can find an optimal shape, form, and structure for a CAD model. Another example of a copilot system is GitHub Copilot, which uses AI to autocomplete your code based on the experience of other people and best practices. In the same way, a system capable of analyzing a large number of BOM structures and product information could predict an optimal model for a new product.
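The BOM-analysis idea can be illustrated with a very simple stand-in: count which components tend to appear together across existing BOMs, then suggest the most frequent companions for a partially built structure. This is plain co-occurrence counting, not an LLM or a generative model, and the component names are hypothetical; it only shows the “learn from many past BOMs” principle that a PLM copilot would build on.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(boms):
    """Count how often each pair of components appears in the same BOM."""
    pairs = Counter()
    for bom in boms:
        for a, b in combinations(sorted(set(bom)), 2):
            pairs[(a, b)] += 1
    return pairs


def suggest(partial_bom, boms, top_n=3):
    """Suggest components that most often co-occur with those already chosen."""
    pairs = cooccurrence(boms)
    chosen = set(partial_bom)
    scores = Counter()
    for (a, b), n in pairs.items():
        if a in chosen and b not in chosen:
            scores[b] += n
        elif b in chosen and a not in chosen:
            scores[a] += n
    return [part for part, _ in scores.most_common(top_n)]


# Hypothetical historical BOMs, flattened to component names.
boms = [
    ["motor", "controller", "battery", "frame"],
    ["motor", "controller", "battery", "wheel"],
    ["motor", "controller", "frame"],
    ["lamp", "battery", "switch"],
]
print(suggest(["motor"], boms))
```

Here “controller” ranks first because it appears alongside “motor” in all three motor BOMs; a real copilot would also weigh attributes, revisions, and supplier data rather than component names alone.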

AI copilots have the potential to improve the work of designers, engineers, manufacturing planners, and supply chain managers. However, to make it happen, software vendors will need large language models (LLMs) trained on data that is actually useful for these domains. For example, OpenAI created the Codex LLM by training it on a large body of public source code, and Microsoft’s GitHub used it to power GitHub Copilot.

What is my conclusion?

The shock and awe triggered by generative AI over the past 100 days indicate huge potential for the industry. But as with all new things in this business, it’s a work in progress. The outcome will depend on specific use cases and economics, on startups developing innovative products, on existing vendors adopting the new tech, and on industrial companies using it to deliver business outcomes.

Best, Oleg

Disclaimer: I’m co-founder and CEO of OpenBOM, developing a global digital thread platform that provides PDM, PLM, and ERP capabilities and a new experience to manage product data and connect manufacturers, construction companies, and their supply chain networks. My opinion can be unintentionally biased.