The evolution of the PLM digital thread has created an ever-increasing need for enterprises to prioritize and better manage their data integration processes. As technologies evolve, so do the interconnectivity and the complexity of system interoperability that must be addressed to bring together multiple departments, systems, databases, and stakeholders into one global operation.

In today's article, I want to continue the discussion I started in my earlier articles about PLM and the digital thread. Check them out first.

PLM and Digital Thread Evolution – Part 1 (Digital Thread Maturity Model)

PLM and Digital Thread Evolution – Part 2 (Files and IDs)

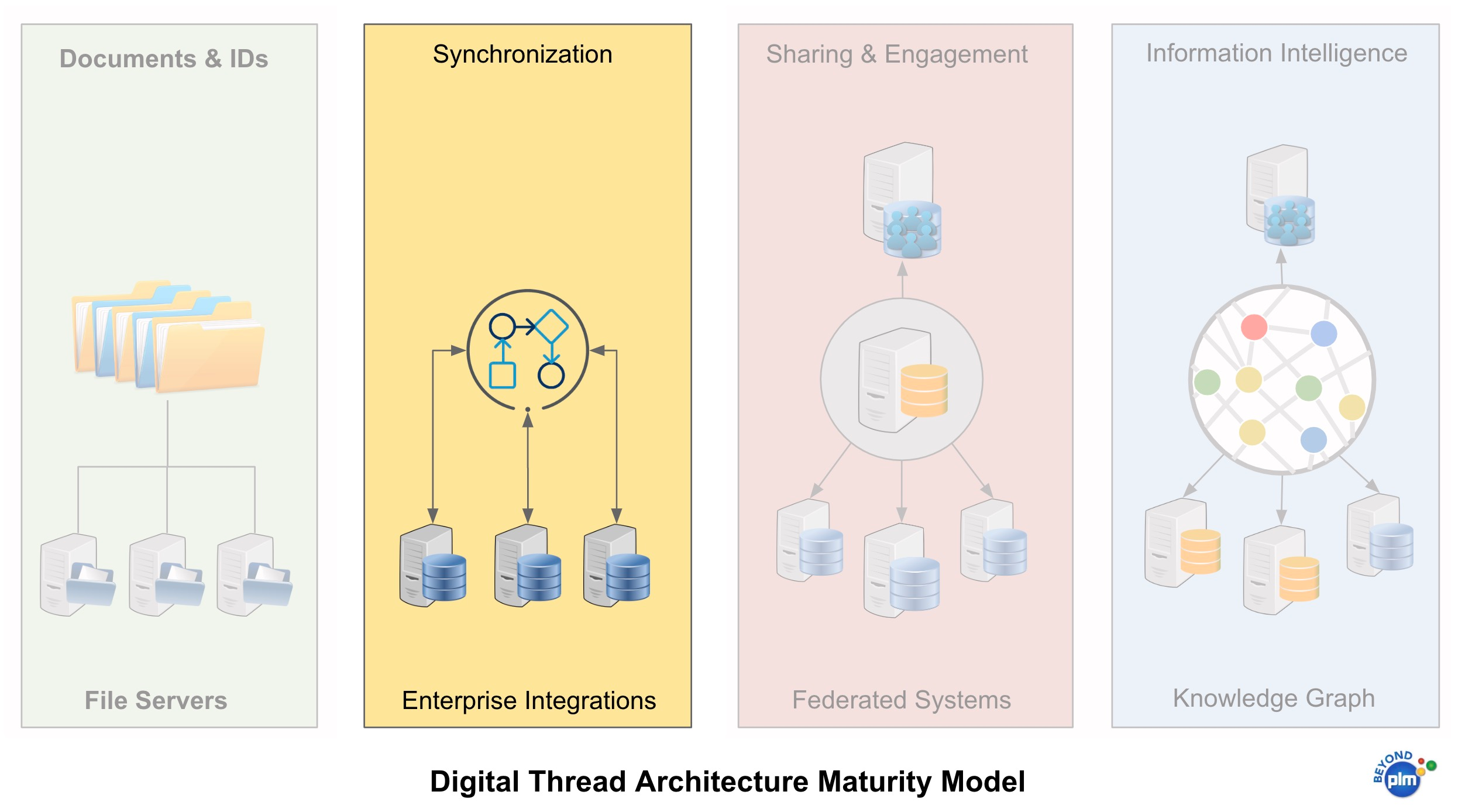

In the first article, I introduced you to the purpose of the digital thread and shared my take on the four phases of digital thread development. I also proposed a maturity model for the digital thread that contains four levels – File Servers, Enterprise Integrations, Data Federation, and Knowledge Graph.

This is Part 3, and I want to talk about enterprise integrations.

The evolution of the PLM digital thread has created the need to integrate information. At the same time, data silos made it a real challenge to organize the information and make it available. Companies faced a hard question: how to keep their existing enterprise systems for computer-aided design (CAD) together with product data management (PDM), product lifecycle management (PLM), supply chain management (SCM), and enterprise resource planning (ERP), and how to integrate the data in these systems across the entire product development process. The biggest challenge of the digital thread at this stage is keeping information up to date while maintaining continuous process management. From a business standpoint, each department (or business function in a product’s lifecycle) demands access to the information it needs. At the same time, each system holds its own information and manages the consistency of that information.

Data Sync (DIY or Application-Based)

Every organization faces the problem of data integration. Because many organizations think about the “application” first, the most natural question for them is “how do we sync data from one system to another?” Data synchronization is low-hanging fruit in any organization, from very small companies to large enterprises. It is usually a task assigned to IT people and service organizations. It is easy because it doesn’t require changes to the flow of existing applications and doesn’t disturb application workflows.

The technologies for data synchronization vary, but they usually start at a very low level: extracting data to simple formats (CSV, XML, JSON) or just exporting Excel files from one system and importing them into another. The justification for these types of integration usually comes from business process needs. Here are some examples of the questions companies ask when they start their data synchronization activities:

- How to send a BOM from a CAD system to ERP

- How to get stock and price information from the ERP system into the PDM system

- How to release documents and send them to the MES system for production planning
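To make the first question concrete, here is a minimal Python sketch of a file-based BOM handoff: export rows to CSV on one side, parse them back on the other. The field names (`part_number`, `description`, `quantity`), the sample parts, and the dictionary standing in for the ERP item table are all hypothetical; real CAD and ERP systems have their own export formats and import APIs.

```python
import csv
import io

# Hypothetical BOM rows as they might come out of a CAD/PDM export.
cad_bom = [
    {"part_number": "PN-100", "description": "Bracket", "quantity": 2},
    {"part_number": "PN-200", "description": "Bolt M6", "quantity": 8},
]

FIELDS = ["part_number", "description", "quantity"]


def export_bom_csv(bom_rows):
    """Serialize BOM rows to CSV text (the 'export' side)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(bom_rows)
    return buffer.getvalue()


def import_bom_csv(csv_text, erp_items):
    """Parse CSV text into a (hypothetical) ERP item table keyed by part number."""
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        # CSV carries everything as text, so the importer must restore types.
        erp_items[row["part_number"]] = {
            "description": row["description"],
            "quantity": int(row["quantity"]),
        }
    return erp_items


erp_items = import_bom_csv(export_bom_csv(cad_bom), {})
print(erp_items["PN-200"])  # {'description': 'Bolt M6', 'quantity': 8}
```

Even in this tiny example, the import side has to know the exporter's column names and restore types (quantities arrive as text), which is exactly the kind of point-to-point knowledge that piles up as more of these integrations are added.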

All these activities rely on a system of identification (we discussed it in Part 2 – Files and IDs) and brute-force data syncing. The operations can run on demand or on a schedule (e.g., every 24 hours). The technology for such integrations ranges from brute-force access to SQL databases to the more standard APIs that PLM solutions provide. The main downside of direct application integration is that this approach very quickly ends in messy business processes and low data quality. As companies grow and advance, it becomes very hard to maintain the continuity of the digital thread and the product lifecycle.
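The scheduled, ID-based sync described above can be sketched as a one-way copy of the records changed since the last run, matched by part number. This is an illustrative assumption of how such a job might work, not how any particular product does it; the `Item` fields, the dictionaries standing in for PLM and ERP, and the timestamp watermark are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Item:
    part_number: str  # shared identifier used to match records across systems
    revision: str
    modified: datetime  # last modification time in the source system


def sync_changed_items(source: dict, target: dict, last_run: datetime) -> list:
    """One-way sync: copy items modified after last_run from source to target.

    source and target map part number -> Item (hypothetical stand-ins for a
    PLM data set and an ERP table). Returns the sorted part numbers copied.
    """
    copied = []
    for part_number, item in source.items():
        if item.modified > last_run:
            target[part_number] = item  # overwrite: last writer wins
            copied.append(part_number)
    return sorted(copied)


# Usage: a nightly job would pass the time of its previous successful run.
last_run = datetime(2024, 1, 1)
plm = {
    "PN-100": Item("PN-100", "B", datetime(2024, 1, 2)),
    "PN-200": Item("PN-200", "A", datetime(2023, 12, 1)),
}
erp = {"PN-100": Item("PN-100", "A", datetime(2023, 11, 1))}

print(sync_changed_items(plm, erp, last_run))  # ['PN-100']
print(erp["PN-100"].revision)  # B
```

Note that `PN-200` never reaches the ERP side: it changed before the last run, so if an earlier run failed or someone deleted the record, the watermark logic has no way to notice. Gaps like this, plus the last-writer-wins overwrite, are one way brute-force syncing drifts into low data quality.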

Enterprise Integration Technologies

Beyond direct data sync comes technology for enterprise application integration. These products have been evolving for the last 20-25 years. First introduced as EAI (enterprise application integration), the technologies advanced into ESB (enterprise service bus) and a variety of other forms leveraging cloud and web technologies to make integration easier. To give their integrations a competitive advantage, these “integration platforms” offered multiple ways to orchestrate digital thread synchronization using techniques such as data mapping, event processing, data workflows, and many others. The most advanced products and platforms in this group started to focus on how to distribute data across the product value chain and support the entire product lifecycle across multiple organizations and PLM, ERP, and other enterprise solutions.
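Two of the techniques mentioned above, event processing and data mapping, can be illustrated with a toy in-process publish/subscribe bus. This is a hypothetical sketch of the pattern, not the API of any EAI or ESB product: the topic name `item.released`, the target field names, and the mapping tables are invented for illustration.

```python
from collections import defaultdict
from typing import Callable


class MessageBus:
    """Toy in-process publish/subscribe bus (a stand-in for an ESB)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Deliver the event to every handler registered for this topic.
        for handler in self._subscribers[topic]:
            handler(message)


# Hypothetical mappings from a PLM item schema to each target system's schema.
ERP_MAPPING = {"part_number": "item_no", "description": "item_desc"}
MES_MAPPING = {"part_number": "item_id", "revision": "rev"}


def map_fields(message: dict, mapping: dict) -> dict:
    """Data mapping step: rename mapped fields and drop everything else."""
    return {target: message[source] for source, target in mapping.items()}


erp_records, mes_records = [], []
bus = MessageBus()
bus.subscribe("item.released", lambda m: erp_records.append(map_fields(m, ERP_MAPPING)))
bus.subscribe("item.released", lambda m: mes_records.append(map_fields(m, MES_MAPPING)))

# PLM publishes one release event; each subscriber receives its own mapped copy.
bus.publish("item.released",
            {"part_number": "PN-100", "revision": "B", "description": "Bracket"})
print(erp_records)  # [{'item_no': 'PN-100', 'item_desc': 'Bracket'}]
print(mes_records)  # [{'item_id': 'PN-100', 'rev': 'B'}]
```

The design point is that the publisher (PLM) no longer calls each target directly; each subscriber owns its own mapping. That removes point-to-point coupling, but the data is still copied into every target system.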

How to Get Beyond “Sync”

As appealing as the technologies offered by different integration solutions are for keeping data flows synchronized, this “sync” approach has a few significant disadvantages: complexity of implementation, lack of real-time data access, and data fragmentation. As an outcome of these factors, systems often struggle to deliver the desired user experience because of the complexity of data processing, availability, and formats. The complexity of the data in a digital thread is very high, and therefore user experience is not just “nice to have” but a matter of survival. Think about typical product lifecycle management tasks in an enterprise organization, such as change impact analysis, BOM comparison, and cost analysis. These tasks (and many others) can benefit from data availability and a modern user experience, yet they cannot easily be achieved by pumping data between different systems and functions. This brings us to the next level of data integration and of the digital thread maturity model: data sharing and federation.
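BOM comparison is a good example of why data availability matters more than the algorithm. Once both BOMs are in one place, a flat comparison keyed by part number is a few lines of Python, as in the sketch below, which assumes single-level BOMs represented as hypothetical `{part_number: quantity}` dictionaries. The hard part in a sync-based architecture is making sure the two inputs are current, consistent copies in the first place.

```python
def compare_boms(old: dict[str, int], new: dict[str, int]) -> dict:
    """Compare two flat, single-level BOMs given as {part_number: quantity}."""
    added = sorted(pn for pn in new if pn not in old)
    removed = sorted(pn for pn in old if pn not in new)
    # Parts present in both BOMs whose quantity differs: (old_qty, new_qty).
    changed = {
        pn: (old[pn], new[pn])
        for pn in sorted(old.keys() & new.keys())
        if old[pn] != new[pn]
    }
    return {"added": added, "removed": removed, "changed": changed}


engineering_bom = {"PN-100": 2, "PN-200": 8, "PN-300": 1}
erp_bom = {"PN-100": 2, "PN-200": 6, "PN-400": 4}
print(compare_boms(erp_bom, engineering_bom))
# {'added': ['PN-300'], 'removed': ['PN-400'], 'changed': {'PN-200': (6, 8)}}
```

Multi-level structures, alternates, and effectivity would make a real comparison considerably harder; the sketch deliberately ignores them.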

What is my conclusion?

A growing number of enterprise applications such as product lifecycle management (PLM), enterprise resource planning (ERP), manufacturing execution systems (MES), supply chain management (SCM), and many others created a situation in which companies were forced to support a variety of data synchronization and integration tools. Starting as simple application export/import and later developing into more sophisticated data processing tools, these integration technologies and platforms pump data across multiple systems in a single company or extended enterprise to ensure that people using applications get access to the information. The organization of these integration methods can vary, but in general, all of them rely on some sort of semantic mapping (e.g., part numbers) and the sync of data and files between multiple systems. Just my thoughts…

PS. This is the second phase of digital thread evolution, and it raises the question of what comes next. Stay tuned for my Digital Thread Evolution – Part 4.

Best, Oleg

Disclaimer: I’m co-founder and CEO of OpenBOM developing a digital cloud-native PDM & PLM platform that manages product data and connects manufacturers, construction companies, and their supply chain networks. My opinion can be unintentionally biased.